What is Java thread priority?

In our introduction to thread priority, we pointed out some problems with the system, notably differences in what priority actually means on different platforms. Here, we'll look at how thread priority is implemented in Windows and Linux.

Windows priorities

In the Hotspot VM for Windows, the priorities passed to setPriority() map to Windows relative priorities (set by the SetThreadPriority() API call). The actual mappings were changed between Java 5 and Java 6 as follows:

Java to Windows priority mappings

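| Java Priority | Windows Priority (Java 5) | Windows Priority (Java 6) |
|---|---|---|
| 1 | THREAD_PRIORITY_LOWEST | THREAD_PRIORITY_LOWEST |
| 2 | THREAD_PRIORITY_LOWEST | THREAD_PRIORITY_LOWEST |
| 3 | THREAD_PRIORITY_BELOW_NORMAL | THREAD_PRIORITY_BELOW_NORMAL |
| 4 | THREAD_PRIORITY_BELOW_NORMAL | THREAD_PRIORITY_BELOW_NORMAL |
| 5 | THREAD_PRIORITY_NORMAL | THREAD_PRIORITY_NORMAL |
| 6 | THREAD_PRIORITY_ABOVE_NORMAL | THREAD_PRIORITY_NORMAL |
| 7 | THREAD_PRIORITY_ABOVE_NORMAL | THREAD_PRIORITY_ABOVE_NORMAL |
| 8 | THREAD_PRIORITY_HIGHEST | THREAD_PRIORITY_ABOVE_NORMAL |
| 9 | THREAD_PRIORITY_HIGHEST | THREAD_PRIORITY_HIGHEST |
| 10 | THREAD_PRIORITY_TIME_CRITICAL | THREAD_PRIORITY_HIGHEST |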

Figure 1. Approximate CPU allocation against thread priority under Windows XP

on a uniprocessor machine.

Results for Vista on a dual core machine are very similar.

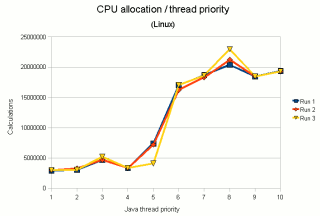

Figure 2. Approximate CPU allocation against thread priority on a uniprocessor

machine under Debian Linux (kernel 2.6.18).

In effect, the priority mappings were 'shifted down' to avoid THREAD_PRIORITY_TIME_CRITICAL, apparently following reports that this setting could affect vital processes such as audio. Note that no Java priority actually maps to THREAD_PRIORITY_IDLE, contrary to what Oaks & Wong suggest (they were possibly misinterpreting a dummy value that appears at the beginning of the priority mapping table in the VM source code).
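To make the 'shift' concrete, here is a minimal sketch that encodes the two mappings from the table above as lookup arrays. It is an illustration only, not the VM source: the class name, the array names and the helper method are all hypothetical, and index 0 is an unused placeholder because Java priorities start at 1.

```java
/** Illustrative lookup of the Java-to-Windows mappings tabulated above (not the VM source). */
final class WindowsPriorityMapping {
    static final String LOWEST = "THREAD_PRIORITY_LOWEST";
    static final String BELOW_NORMAL = "THREAD_PRIORITY_BELOW_NORMAL";
    static final String NORMAL = "THREAD_PRIORITY_NORMAL";
    static final String ABOVE_NORMAL = "THREAD_PRIORITY_ABOVE_NORMAL";
    static final String HIGHEST = "THREAD_PRIORITY_HIGHEST";
    static final String TIME_CRITICAL = "THREAD_PRIORITY_TIME_CRITICAL";

    // Index = Java priority. Index 0 is an unused placeholder (hypothetical layout).
    static final String[] JAVA_5 = {
        null, LOWEST, LOWEST, BELOW_NORMAL, BELOW_NORMAL, NORMAL,
        ABOVE_NORMAL, ABOVE_NORMAL, HIGHEST, HIGHEST, TIME_CRITICAL
    };
    static final String[] JAVA_6 = {
        null, LOWEST, LOWEST, BELOW_NORMAL, BELOW_NORMAL, NORMAL,
        NORMAL, ABOVE_NORMAL, ABOVE_NORMAL, HIGHEST, HIGHEST
    };

    /** Returns the Windows relative priority name for a Java priority in the range 1-10. */
    static String windowsPriority(String[] table, int javaPriority) {
        if (javaPriority < Thread.MIN_PRIORITY || javaPriority > Thread.MAX_PRIORITY) {
            throw new IllegalArgumentException("Java priority must be 1-10: " + javaPriority);
        }
        return table[javaPriority];
    }

    public static void main(String[] args) {
        // Print both mappings side by side, one row per Java priority.
        for (int p = Thread.MIN_PRIORITY; p <= Thread.MAX_PRIORITY; p++) {
            System.out.printf("%2d  %-32s %s%n", p,
                    windowsPriority(JAVA_5, p), windowsPriority(JAVA_6, p));
        }
    }
}
```

Note that from Java 6 onwards, every Windows level apart from the lowest two is reached by exactly two adjacent Java priorities, and nothing maps to THREAD_PRIORITY_TIME_CRITICAL.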

On Windows, thread priority is a key element in deciding which thread gets scheduled, although the overall priority of a thread is a combination of the process's priority class, the thread's relative priority (values from the table above), plus any temporary "boost" given in specific circumstances (such as on returning from a wait on I/O). But in general:

Lower-priority threads are given CPU when all higher-priority threads are waiting (or otherwise unable to run) at that given moment.

The actual proportion of CPU allotted to a thread therefore depends on how often that situation occurs; there's no relation per se between priority and CPU allocation.

Now of course, if this were literally the be-all and end-all of thread scheduling, then there'd quite possibly be lower-priority threads that barely got any CPU at all, being continually starved by higher-priority threads that needed CPU. So Windows has a fallback mechanism, whereby a thread that hasn't run for a long time is given a temporary priority boost. (For more details about some of the points mentioned here, see the section on thread scheduling.)

What this generally means is that on Windows:

Thread priority isn't very meaningful when all threads are competing for CPU.

As an illustration, Figure 1 shows the results of an experiment in which ten threads are run concurrently, one thread with each Java priority. Each thread sits in a CPU-intensive loop (continually generating a random number using a XORShift generator) and keeps a count of how many numbers it has generated (see note 1 below). After a certain period (60 seconds in this case), all threads are told to stop and queried to find out how many numbers they generated; the number generated is taken as an indication of the CPU time allocated to that thread (see note 2 below).

As can be seen, thread priorities 1-8 end up with a practically equal share of the CPU, whilst priorities 9 and 10 get a vastly greater share (though with essentially no difference between 9 and 10). The version tested was Java 6 Update 10.
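For readers who want to try something similar, the experiment described above could be sketched roughly as follows. This is a reconstruction from the description rather than the original test code: the class and method names are hypothetical, the XORShift shift constants (13, 7, 17) are one commonly used 64-bit choice and an assumption here, and the sketch counts every number generated rather than a subset.

```java
/** Rough sketch of the experiment: one CPU-bound thread per Java priority. */
final class PriorityExperiment {
    private static volatile boolean running;
    /** Each worker stores its final generator state here so the loop is not dead code. */
    private static final long[] sink = new long[Thread.MAX_PRIORITY];

    /** Runs the threads for the given time; result[p - 1] is the count for Java priority p. */
    static long[] run(long durationMillis) {
        final long[] counts = new long[Thread.MAX_PRIORITY];
        Thread[] workers = new Thread[Thread.MAX_PRIORITY];
        running = true;
        for (int p = Thread.MIN_PRIORITY; p <= Thread.MAX_PRIORITY; p++) {
            final int index = p - 1;
            Thread t = new Thread(() -> {
                long x = System.nanoTime() | 1L; // XORShift needs a non-zero seed
                long count = 0;
                while (running) {
                    x ^= x << 13;
                    x ^= x >>> 7;
                    x ^= x << 17;
                    count++;
                }
                counts[index] = count;
                sink[index] = x;
            });
            t.setPriority(p);
            workers[index] = t;
        }
        for (Thread t : workers) {
            t.start();
        }
        boolean interrupted = false;
        try {
            Thread.sleep(durationMillis);
        } catch (InterruptedException e) {
            interrupted = true;
        }
        running = false; // tell all threads to stop
        for (Thread t : workers) {
            while (true) {
                try {
                    t.join(); // join() also makes the worker's writes visible here
                    break;
                } catch (InterruptedException e) {
                    interrupted = true;
                }
            }
        }
        if (interrupted) {
            Thread.currentThread().interrupt();
        }
        return counts;
    }

    public static void main(String[] args) {
        long[] counts = run(60_000); // 60 seconds, as in the article
        for (int p = Thread.MIN_PRIORITY; p <= Thread.MAX_PRIORITY; p++) {
            System.out.println("Priority " + p + ": " + counts[p - 1]);
        }
    }
}
```

The absolute counts will vary from machine to machine; what matters is each thread's count relative to the others.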

For what it's worth, I repeated the experiment on a dual core machine running Vista, and the shape of the resulting graph is the same. My best guess for the special behaviour of priorities 9 and 10 is that THREAD_PRIORITY_HIGHEST in a foreground window has just enough priority for certain other special treatment by the scheduler to kick in (for example, threads of internal priority 14 and above have their full quantum replenished after a wait, whereas lower priorities have theirs reduced by 1).
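As background to the "internal priority" mentioned above, the following sketch shows how Windows derives a thread's base priority level from the process priority class and the relative thread priority. The numbers follow Microsoft's published scheduling priorities table for the non-realtime classes; the class, enum and method names are hypothetical, and the sketch deliberately ignores the temporary boosts and foreground adjustments that the scheduler applies on top of the base level.

```java
/** Sketch of the Windows base priority level for non-realtime priority classes. */
final class WindowsBasePriority {
    /** Process priority classes with the base level of a THREAD_PRIORITY_NORMAL thread. */
    enum PriorityClass {
        IDLE(4), BELOW_NORMAL(6), NORMAL(8), ABOVE_NORMAL(10), HIGH(13);

        final int base;

        PriorityClass(int base) {
            this.base = base;
        }
    }

    /** Relative thread priorities, as passed to SetThreadPriority(). */
    enum Relative {
        IDLE(0), LOWEST(-2), BELOW_NORMAL(-1), NORMAL(0),
        ABOVE_NORMAL(1), HIGHEST(2), TIME_CRITICAL(0);

        final int offset; // ignored for IDLE and TIME_CRITICAL, which are fixed levels

        Relative(int offset) {
            this.offset = offset;
        }
    }

    /** Base level (1-15) before any temporary boost is applied. */
    static int baseLevel(PriorityClass cls, Relative rel) {
        switch (rel) {
            case IDLE:
                return 1;  // fixed at the bottom of the dynamic range
            case TIME_CRITICAL:
                return 15; // fixed at the top of the dynamic range
            default:
                return cls.base + rel.offset;
        }
    }

    public static void main(String[] args) {
        for (Relative rel : Relative.values()) {
            System.out.println(rel + " -> " + baseLevel(PriorityClass.NORMAL, rel));
        }
    }
}
```

In a process of the default NORMAL class, this gives base levels 6 to 10 for the five ordinary relative priorities, with THREAD_PRIORITY_TIME_CRITICAL jumping to 15.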

Java thread priority to nice value mappings in Linux

| Java thread priority | Linux nice value |
|---|---|
| 1 | 4 |
| 2 | 3 |
| 3 | 2 |
| 4 | 1 |
| 5 | 0 |
| 6 | -1 |
| 7 | -2 |
| 8 | -3 |
| 9 | -4 |
| 10 | -5 |

Linux priorities

Under Linux, you have to jump through more hoops to get thread priorities to function at all, although in the end, they may be more useful than under Windows. In Linux:

- thread priorities only work from Java 6 onwards;

- for them to work, you must be running as root (or with root privileges via setuid);

- the JVM parameter -XX:+UseThreadPriorities must be included.

The rationale behind requiring root privileges to alter thread priorities largely eludes me. Whether or not Linux itself generally should place such a restriction on changing nice values is arguable, but since it doesn't, it seems odd to add it to the JVM (as opposed to, say, building in a restriction via the Java SecurityManager). And does anyone really run, say, their web server as root?

Assuming you go through these steps to enable them, Java thread priorities in Hotspot map to nice values. Unlike Windows priorities, Linux nice values are used as a target for CPU allocation (although, like Windows, recent versions of Linux, from kernel 2.6.8 onwards, also apply various heuristics to temporarily boost or penalise threads). The mappings from Java priorities to Linux nice values are given in the table above.

Note that:

- nice value means "how nice the thread is to other threads", so a lower number means higher priority;

- Java doesn't actually map to the full range (nice values go from -20 to 19), probably to prevent negative impact on system threads.
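The mapping in the table is simply linear around the default priority, which can be expressed in one line. The sketch below is an illustration of the table only, with hypothetical class and method names; it is not how the VM itself stores the mapping.

```java
/** Illustrative formula for the Java priority to nice value table. */
final class NiceMapping {
    /** Returns the nice value for a Java priority (1-10): 1 gives 4, 5 gives 0, 10 gives -5. */
    static int niceValueFor(int javaPriority) {
        if (javaPriority < Thread.MIN_PRIORITY || javaPriority > Thread.MAX_PRIORITY) {
            throw new IllegalArgumentException("Java priority must be 1-10: " + javaPriority);
        }
        // NORM_PRIORITY (5) maps to nice 0; each step up in priority lowers nice by 1.
        return Thread.NORM_PRIORITY - javaPriority;
    }

    public static void main(String[] args) {
        for (int p = Thread.MIN_PRIORITY; p <= Thread.MAX_PRIORITY; p++) {
            System.out.println("Java priority " + p + " -> nice " + niceValueFor(p));
        }
    }
}
```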

Figure 2 shows the results of the thread priority experiment repeated under Linux with kernel 2.6.18. The different coloured traces simply represent 3 separate runs of the experiment. The graph shows that there is a correlation between Java priority (nice value) and CPU allocation, although it is far from linear.

Solaris priorities

Sun have published more detailed information on Solaris thread priorities.

1. To reduce the number of memory writes, each thread actually incremented the counter only when the bottom 7 bits of the random number generated were all set. However, this should not affect the test, since millions of numbers were generated by each thread, and the bits of numbers generated by XORShift are generally considered equally random.

2. For validation purposes, each thread also recorded its elapsed CPU time as reported by ThreadMXBean. The corresponding graphs have essentially the same shape, but on the machines tested on, the granularity of measurements using the count method is actually better.
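The two measurement details in these notes can be sketched as below. Again, this is a hedged illustration rather than the original test code: the class and method names are hypothetical, and the XORShift shift constants (13, 7, 17) are an assumed, commonly used 64-bit choice. The first method counts only numbers whose bottom 7 bits are all set (roughly one in 128), and the second reads the calling thread's CPU time via ThreadMXBean.

```java
import java.lang.management.ManagementFactory;
import java.lang.management.ThreadMXBean;

/** Sketch of the two measurements described in notes 1 and 2. */
final class MeasurementSketch {
    /** Generates n XORShift numbers and counts those whose bottom 7 bits are all set. */
    static long sparseCount(long seed, long n) {
        long x = (seed == 0) ? 1 : seed; // XORShift state must never be zero
        long count = 0;
        for (long i = 0; i < n; i++) {
            x ^= x << 13;
            x ^= x >>> 7;
            x ^= x << 17;
            if ((x & 0x7F) == 0x7F) {
                count++; // in the real test, this is the only point where the counter is written
            }
        }
        return count;
    }

    /** CPU time in nanoseconds used by the calling thread, or -1 if it cannot be reported. */
    static long currentThreadCpuNanos() {
        ThreadMXBean bean = ManagementFactory.getThreadMXBean();
        return bean.isCurrentThreadCpuTimeSupported() ? bean.getCurrentThreadCpuTime() : -1L;
    }

    public static void main(String[] args) {
        long before = currentThreadCpuNanos();
        long count = sparseCount(System.nanoTime(), 100_000_000L);
        long after = currentThreadCpuNanos();
        System.out.println("Count: " + count);
        System.out.println("CPU time (ns): " + (after - before));
    }
}
```

Comparing the count with the reported CPU time for each thread gives a simple cross-check that the count is a reasonable proxy for CPU allocation.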

Editorial page content written by Neil Coffey. Copyright © Javamex UK 2021. All rights reserved.